In a previous post, A Guide to STMicroelectronics' Edge AI Suite Tools , I provided an overview of the tools in STMicroelectronics' Edge AI Suite. In this post, we'll focus on one such tool— ST Edge AI Developer Cloud— and walk through how to use it to test machine learning models.

ST Edge AI Developer Cloud is a web-based tool that allows you to test machine learning models by remotely accessing ST boards. It enables simulation of deployment and performance testing without the need to purchase any physical boards.

This guide outlines each step required to use the Developer Cloud, with screenshots provided to show the exact process. A similar walkthrough is also available in a video on the STMicroelectronics YouTube Channel.

Walkthrough

1. Accessing Edge AI Developer Cloud

Visit the ST Edge AI Developer Cloud and click "Start Now" on the landing page.

2. Sign in or Create an Account

To use the tool, log into your myST account or create one if you haven't already.

3. Import a Model

Import a model from your device or from the ST Model Zoo. For this example, I will use the first "Hand Posture" model that appears in the Model Zoo. Once selected, click "Start" next to the imported model.

4. Choose Platform & Version

Select a version and platform to use. For this demonstration, I will use the default version, ST Edge AI Core 1.0.0, and select STM32 MPUs as the target platform.

5. Quantize the Model

Click "Launch Quantization". You may also upload a .npz file for accuracy evaluation.

After quantization, click "Go Next" to move on to the Optimization stage.

6. Optimize the Model

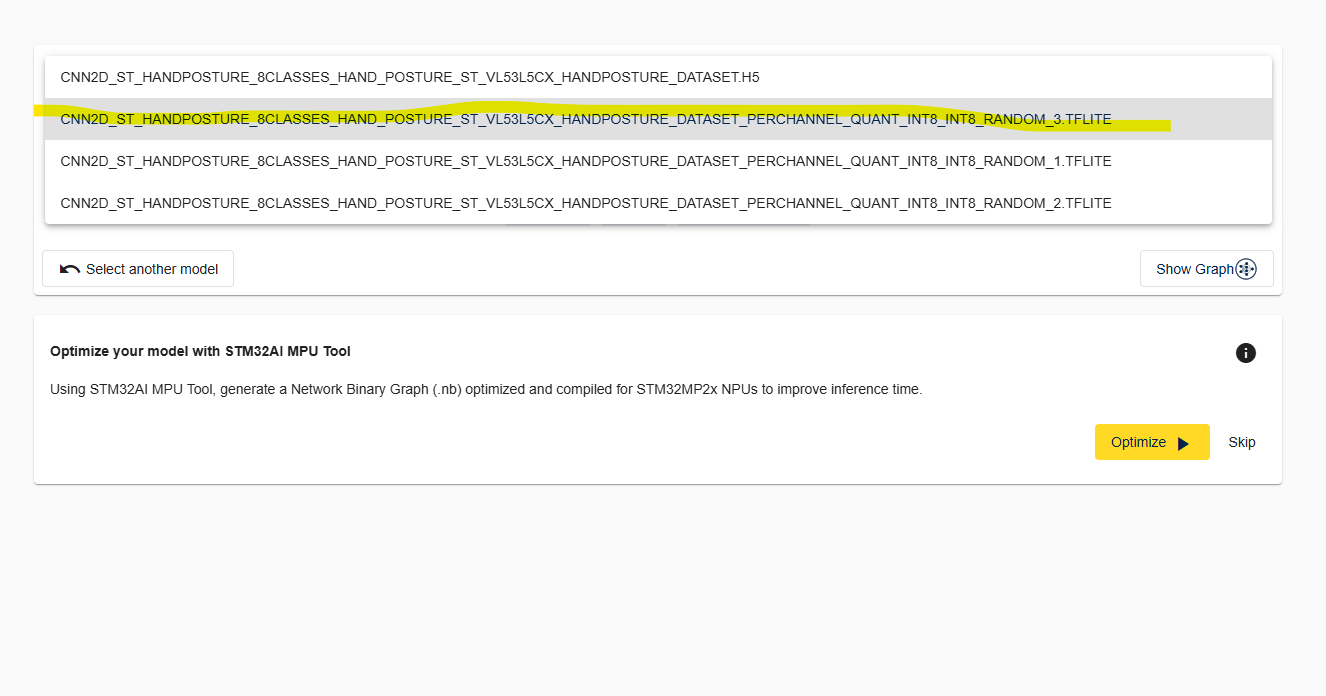

In the Optimization section, select a .tflite file from the model selector at the top,

then click "Optimize".

Once the model has been optimized, click "Continue", which will appear next to the "Optimize" button.

7. Benchmark Across Boards

Click "Start Benchmark" for all available boards. This will remotely run inference on a physical board and display the inference time once complete. Afterwards, click "Go Next" above the boards.

8. View Results

Under the "Results" section, you can view metrics such as weight size, performance, and resource utilization.

The "Show details per layer" option shows the resource utilization on the selected board, and the "Show performance summary" option compares inference times across all tested boards. After reviewing the results, click "Go Next".

9. Generate Code

Based on the benchmark results, generate code tailored to the optimal board. In this example, we will select the STM32MP135F-DK board, as it showed the fastest inference time. To view the timings, refer to the "Show Performance Summary" graph from the "Results" section.

Conclusion

The ST Edge AI Developer Cloud is a powerful testing environment for optimizing AI models on ST hardware. By allowing developers to evaluate boards remotely, it streamlines the deployment process and speeds up decision-making when selecting the best platform for your machine learning applications.